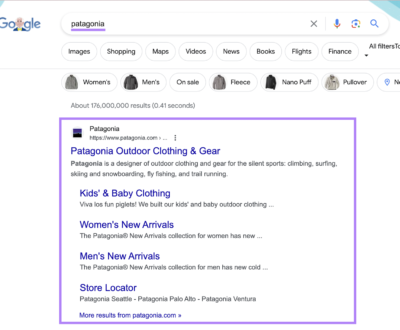

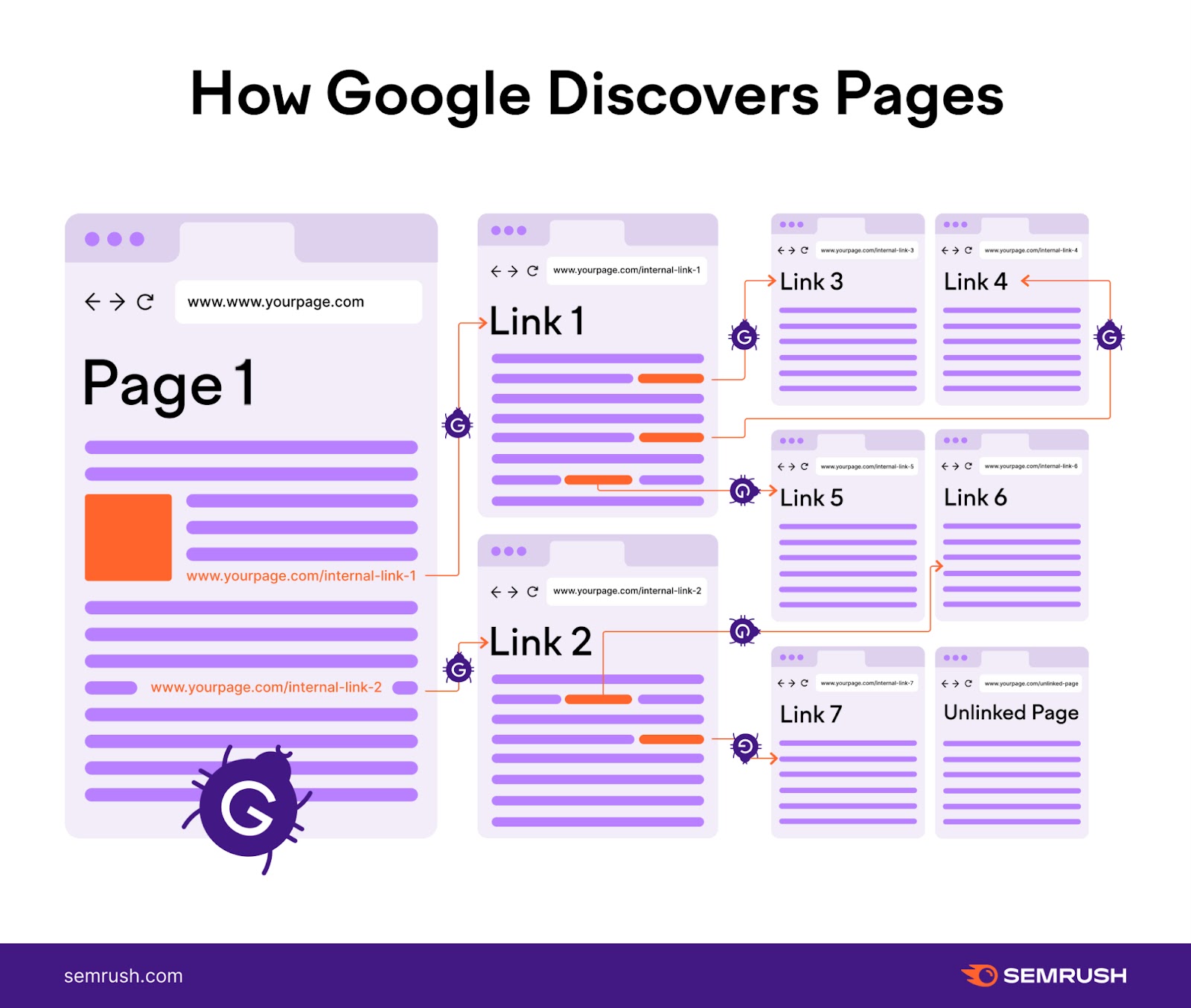

Web crawlers (also called spiders or bots) are programs that visit (or “crawl”) pages across the web.

Search engines use crawlers to discover content that they can then index, meaning store in their enormous databases.

These programs discover your content by following links on your site.

But the process doesn’t always go smoothly because of crawl errors.

Before we dive into these errors and how to address them, let’s start with the basics.

What Are Crawl Errors?

Crawl errors occur when search engine crawlers can’t navigate through your webpages the way they normally do.

When this happens, search engines like Google can’t fully explore and understand your website’s content or structure.

This is a problem because crawl errors can prevent your pages from being discovered. Which means they can’t be indexed, appear in search results, or drive organic (unpaid) traffic to your site.

Google separates crawl errors into two categories: site errors and URL errors.

Let’s explore both.

Site Errors

Site errors are crawl errors that can impact your whole website.

Server, DNS, and robots.txt errors are the most common.

Server Errors

Server errors (which return a 5xx HTTP status code) happen when the server prevents the page from loading.

Here are the most common server errors:

- Internal server error (500): The server can’t complete the request. It can also be returned when no more specific error applies.

- Bad gateway error (502): One server acts as a gateway and receives an invalid response from another server

- Service unavailable error (503): The server is currently unavailable, usually because it is under maintenance or being updated

- Gateway timeout error (504): One server acts as a gateway and doesn’t receive a response from another server in time. This can happen when there’s too much traffic on the website.

When search engines constantly encounter 5xx errors, they can slow a website’s crawl rate.

That means search engines like Google might be unable to find and index all your content.
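To make the status code ranges concrete, here is a minimal Python sketch that sorts a code into a broad crawl category. The function name `describe_crawl_status` and its labels are illustrative assumptions, not terms Google uses.

```python
from http import HTTPStatus


def describe_crawl_status(code: int) -> str:
    """Return a rough, illustrative label for an HTTP status code."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "url error"      # e.g., 403 and 404
    if 500 <= code < 600:
        return "server error"   # e.g., 500, 502, 503, 504
    return "unknown"


# Print the standard reason phrase and our label for the codes listed above.
for code in (500, 502, 503, 504):
    print(code, HTTPStatus(code).phrase, "->", describe_crawl_status(code))
```

A script like this could be pointed at the status codes in your server logs to see how often crawlers hit 5xx responses.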

DNS Errors

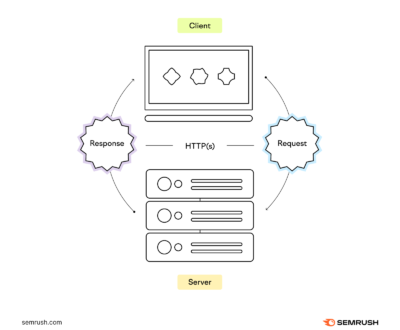

A domain name system (DNS) error occurs when search engines can’t connect with your domain.

All websites and devices have at least one internet protocol (IP) address uniquely identifying them on the web.

The DNS makes it easier for people and computers to talk to each other by matching domain names to their IP addresses.

Without the DNS, we would have to manually enter a website’s IP address instead of typing its URL.

So, instead of entering “www.semrush.com” in your URL bar, you would have to use our IP address: “34.120.45.191.”

DNS errors are less common than server errors. But here are the ones you might encounter:

- DNS timeout: Your DNS server didn’t reply to the search engine’s request in time

- DNS lookup: The search engine couldn’t reach your website because your DNS server didn’t find your domain name
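If you want to check for yourself whether a domain resolves, a rough sketch using Python’s standard `socket` module might look like this. The helper name `check_dns` and the injectable `resolver` parameter are assumptions made for illustration and testing. The sketch only reports that a lookup failed; it does not try to tell a timeout apart from a missing record, since that detail varies by platform.

```python
import socket


def check_dns(hostname, resolver=socket.getaddrinfo):
    """Resolve hostname; return (True, addresses) or (False, error message)."""
    try:
        results = resolver(hostname, None)
    except socket.gaierror as exc:
        # getaddrinfo reports resolution failures as socket.gaierror
        return False, f"DNS lookup failed: {exc}"
    # Each result is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] holds the IP address.
    addresses = sorted({info[4][0] for info in results})
    return True, ", ".join(addresses)


if __name__ == "__main__":
    print(check_dns("www.semrush.com"))
```

The returned addresses depend on your network and on the domain’s current DNS records, so they may differ from any IP shown in this article.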

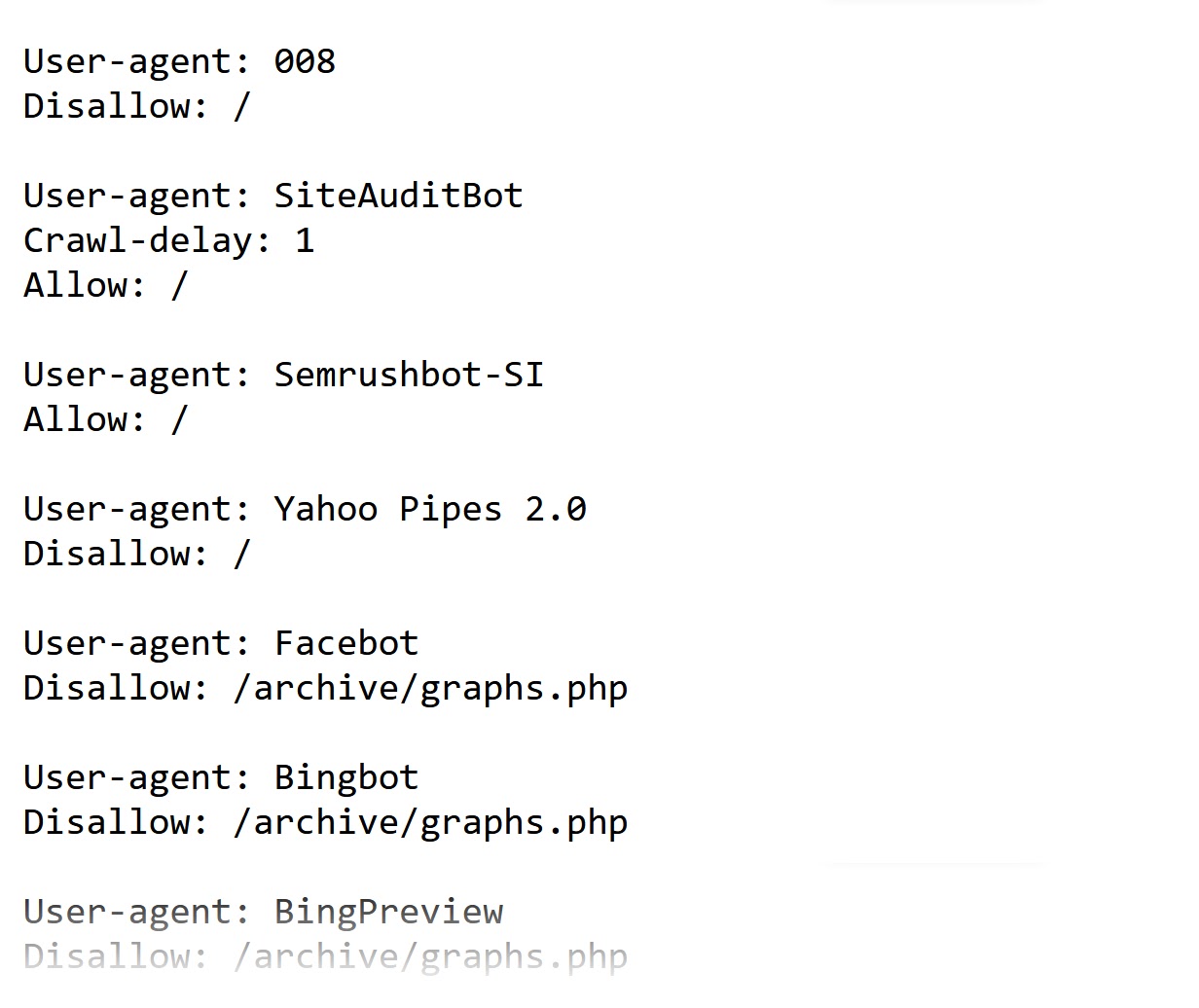

Robots.txt Errors

Robots.txt errors arise when search engines can’t retrieve your robots.txt file.

Your robots.txt file tells search engines which pages they can crawl and which they can’t.

A robots.txt file has three main parts. Here’s what each does:

- User-agent: This line identifies the crawler. And “*” means that the rules are for all search engine bots.

- Disallow/allow: This line tells search engine bots whether they should crawl your website or certain sections of your website

- Sitemap: This line indicates your sitemap location
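As an illustration of those three parts, the sketch below feeds a small sample file to Python’s built-in `urllib.robotparser`. The file contents and the `/admin/` and `/blog/` paths are hypothetical examples, not rules taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt with the three parts described above.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /blog/
Sitemap: https://www.yoursite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# Ask whether a crawler may fetch specific URLs under these rules.
print(parser.can_fetch("Googlebot", "https://www.yoursite.com/blog/post"))    # True
print(parser.can_fetch("Googlebot", "https://www.yoursite.com/admin/login"))  # False

# site_maps() (Python 3.8+) lists the Sitemap lines found in the file.
print(parser.site_maps())
```

Because the rules are addressed to “*”, they apply to any crawler name passed to `can_fetch`.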

URL Errors

Unlike site errors, URL errors only affect the crawlability of specific pages on your site.

Here’s an overview of the different types:

404 Errors

A 404 error means that the search engine bot couldn’t find the URL. And it’s one of the most common URL errors.

It happens when:

- You’ve changed the URL of a page without updating old links pointing to it

- You’ve deleted a page or article from your site without adding a redirect

- You have broken links (e.g., there are errors in the URL)

By default, a server such as Nginx shows a basic, unstyled 404 page.

But most companies use custom 404 pages today.

These custom pages improve the user experience. And allow you to stay consistent with your website’s design and branding.

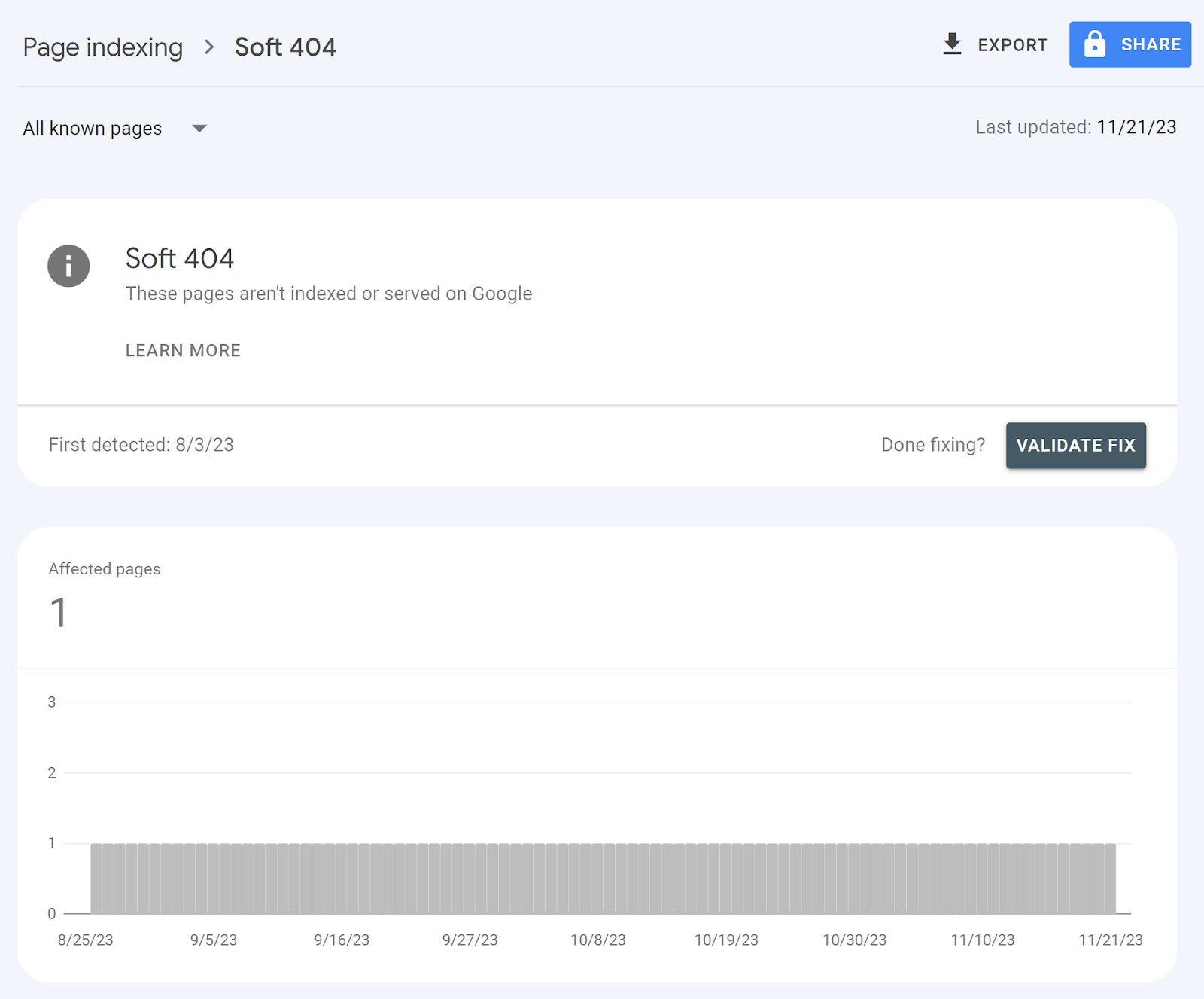

Soft 404 Errors

Soft 404 errors happen when the server returns a 200 code but Google thinks it should be a 404 error.

The 200 code means everything is OK. It’s the expected HTTP response code if there are no issues.

So, what causes soft 404 errors?

- JavaScript file issue: The JavaScript resource is blocked or can’t be loaded

- Thin content: The page has too little content to provide enough value to the user. Like an empty internal search result page.

- Low-quality or duplicate content: The page isn’t useful to users or is a copy of another page. For example, placeholder pages that shouldn’t be live, like those that contain “lorem ipsum” content. Or duplicate content that doesn’t use canonical URLs, which tell search engines which page is the primary one.

- Other reasons: Missing files on the server or a broken connection to your database

Here’s what you see in Google Search Console (GSC) when it finds pages with these errors.
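To get a feel for how a page can return 200 and still look like a 404, here is a deliberately simple heuristic in Python. It is only a sketch: the phrase list, the 50-word threshold, and the function name `looks_like_soft_404` are all assumptions, and Google’s actual detection is not public and is certainly more sophisticated.

```python
import re

# Hypothetical phrases that often appear on "empty" pages.
NOT_FOUND_PHRASES = ("page not found", "no results found", "lorem ipsum")


def looks_like_soft_404(status_code: int, html: str, min_words: int = 50) -> bool:
    """Flag pages that return 200 but look empty or like an error page."""
    if status_code != 200:
        return False  # a real error code is not a *soft* 404
    # Crude tag stripping; good enough for a rough word count.
    text = re.sub(r"<[^>]+>", " ", html).lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    return len(text.split()) < min_words


print(looks_like_soft_404(200, "<h1>Page not found</h1>"))  # True
print(looks_like_soft_404(404, "<h1>Page not found</h1>"))  # False
```

A check like this can help you shortlist pages to review by hand before they show up in a report.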

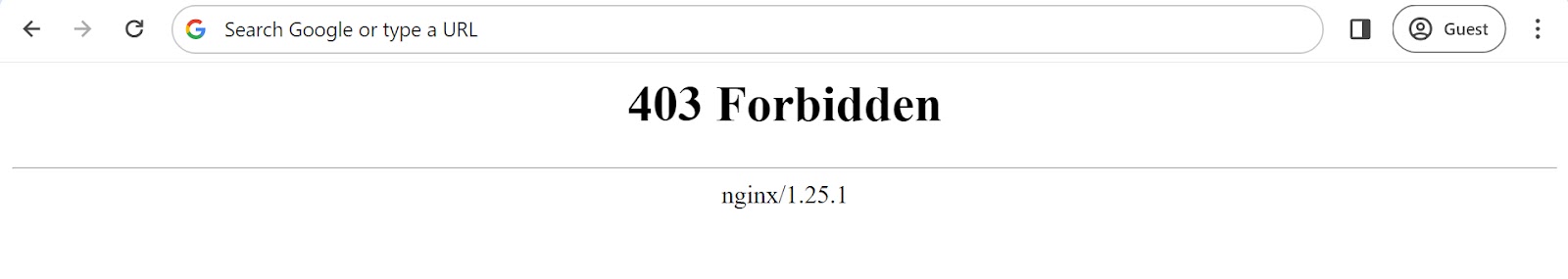

403 Forbidden Errors

The 403 forbidden error means the server denied a crawler’s request. Meaning the server understood the request, but the crawler isn’t able to access the URL.

On an Nginx server, this appears as a plain “403 Forbidden” page.

Problems with server permissions are the main causes of 403 errors.

Server permissions define users’ and admins’ rights on a folder or file.

We can divide the permissions into three categories: read, write, and execute.

For example, you won’t be able to access a URL if you don’t have the read permission.

A faulty .htaccess file is another recurring cause of 403 errors.

An .htaccess file is a configuration file used on Apache servers. It’s helpful for configuring settings and implementing redirects.

But any error in your .htaccess file can result in issues like a 403 error.
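On Unix-like servers, read, write, and execute permissions are stored as mode bits. The sketch below uses Python’s standard `stat` module to show how a mode such as 644 breaks down. The helper name `others_can_read` is hypothetical, and it rests on a simplifying assumption: that the web server process falls into the “others” class rather than being the file’s owner or in its group.

```python
import stat


def others_can_read(mode: int) -> bool:
    """Return True if the 'others' class has read permission."""
    return bool(mode & stat.S_IROTH)


# 0o644: owner can read/write, group and others can only read.
print(stat.filemode(stat.S_IFREG | 0o644))  # -rw-r--r--
print(others_can_read(0o644))               # True

# 0o640: others have no access, a common cause of 403 responses
# under the assumption stated above.
print(others_can_read(0o640))               # False
```

To inspect a real file, you could pass `os.stat(path).st_mode` to the same helper.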

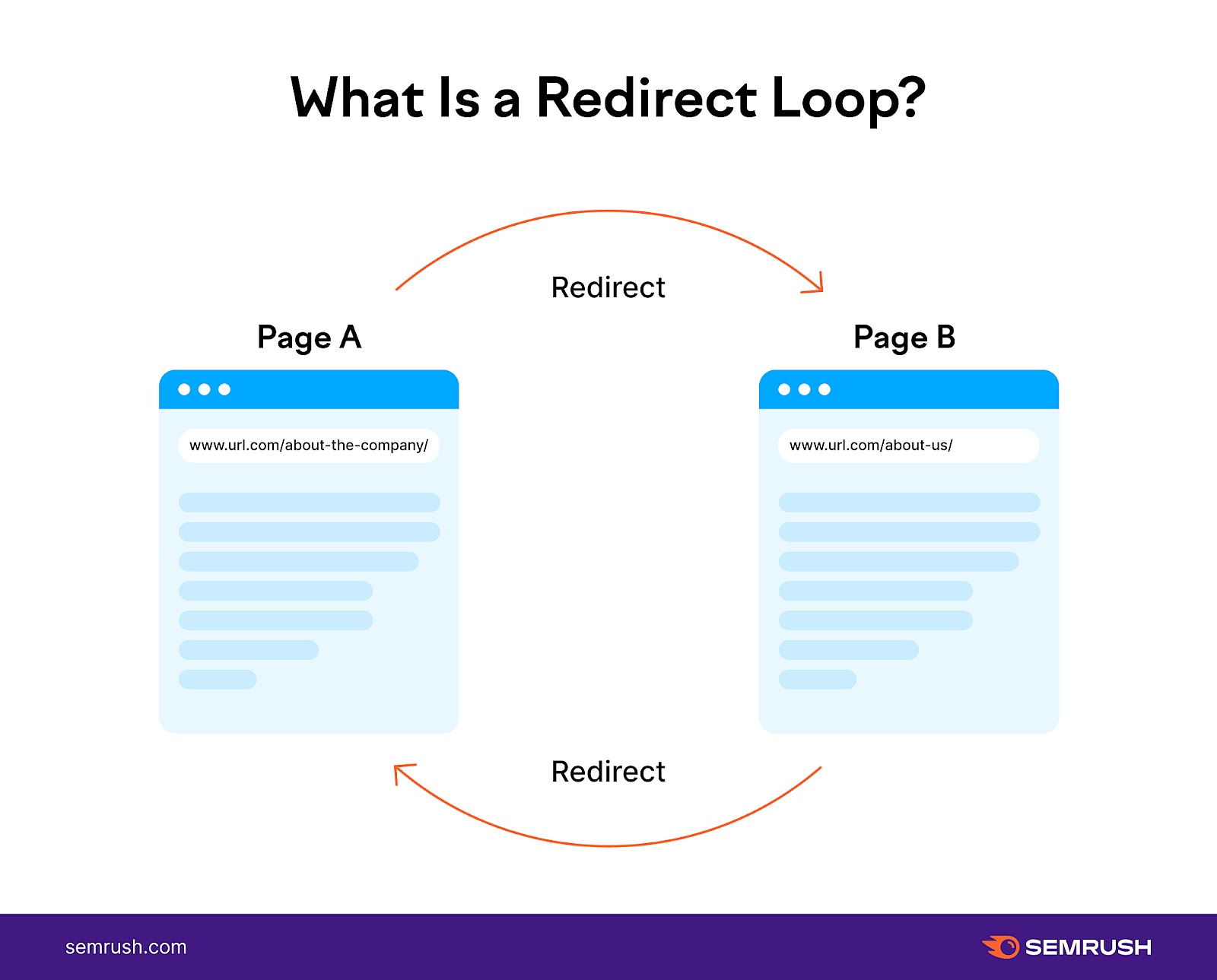

Redirect Loops

A redirect loop happens when page A redirects to page B. And page B redirects back to page A.

The result?

An infinite loop of redirects that prevents visitors and crawlers from accessing your content. Which can hurt your rankings.
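If you keep your redirects in a simple source-to-destination mapping, a loop can be spotted by following the chain and watching for a repeated URL. Here is a minimal Python sketch; the function name `find_redirect_loop`, the `max_hops` limit, and the sample paths are assumptions for illustration.

```python
def find_redirect_loop(start, redirects, max_hops=10):
    """Follow redirects from start; return the looping chain, or None."""
    path = [start]
    current = start
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        if current in path:
            # We have seen this URL before, so the chain loops back on itself.
            return path[path.index(current):] + [current]
        path.append(current)
    return None


# Page A redirects to page B, and page B redirects back to page A.
print(find_redirect_loop("/a", {"/a": "/b", "/b": "/a"}))  # ['/a', '/b', '/a']

# A normal redirect ends at a page with no further redirect.
print(find_redirect_loop("/old", {"/old": "/new"}))        # None
```

The same approach also catches longer loops, such as A to B to C and back to A.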

How to Find Crawl Errors

Site Audit

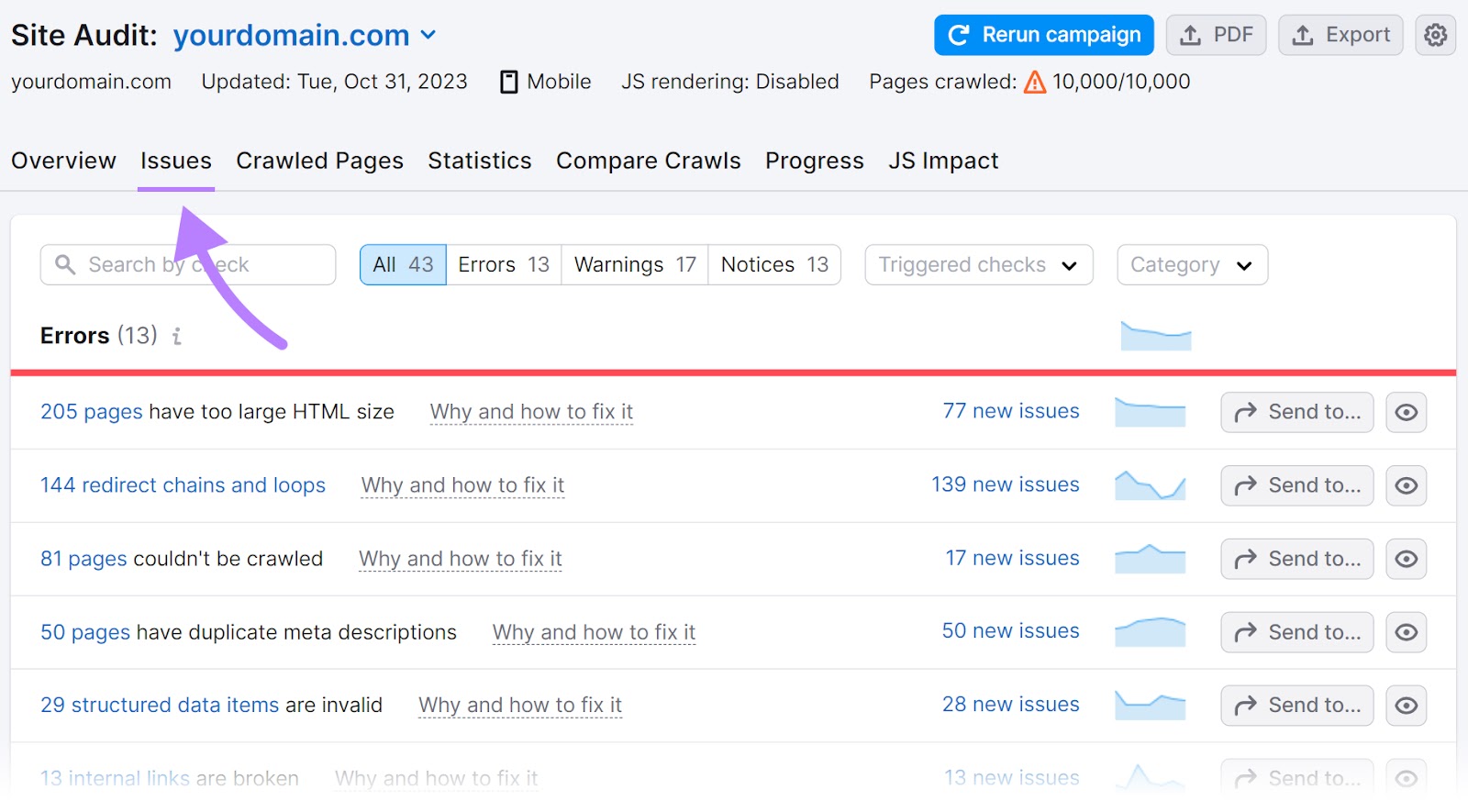

Semrush’s Web site Audit permits you to simply uncover points affecting your web site’s crawlability. And gives strategies on learn how to handle them.

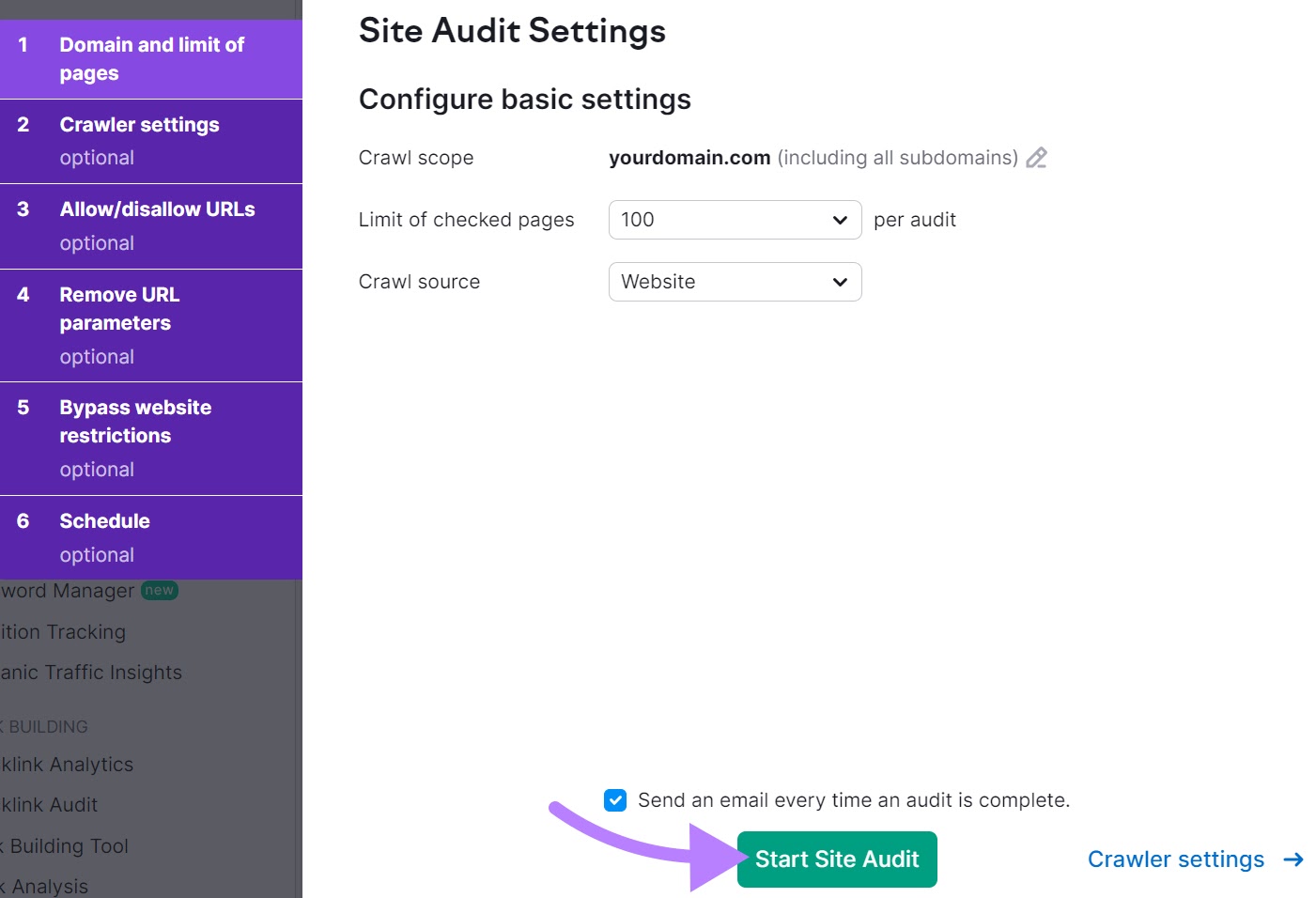

Open the instrument, enter your area title, and click on “Begin Audit.”

Then, observe the Web site Audit configuration information to regulate your settings. And click on “Begin Web site Audit.”

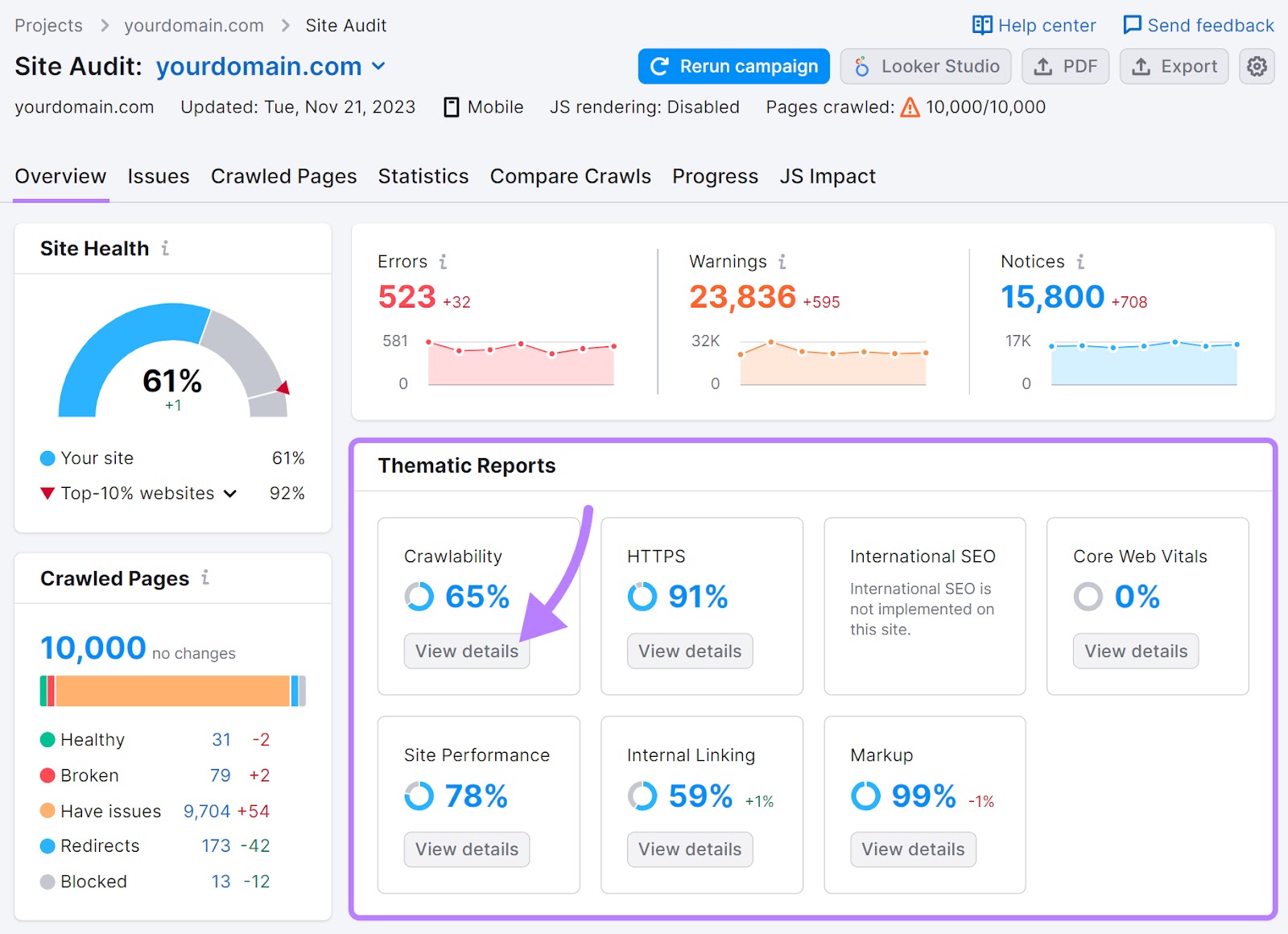

You’ll be taken to the “Overview” report.

Click “View details” in the “Crawlability” module under “Thematic Reports.”

You’ll get an overall understanding of how you’re doing in terms of crawl errors.

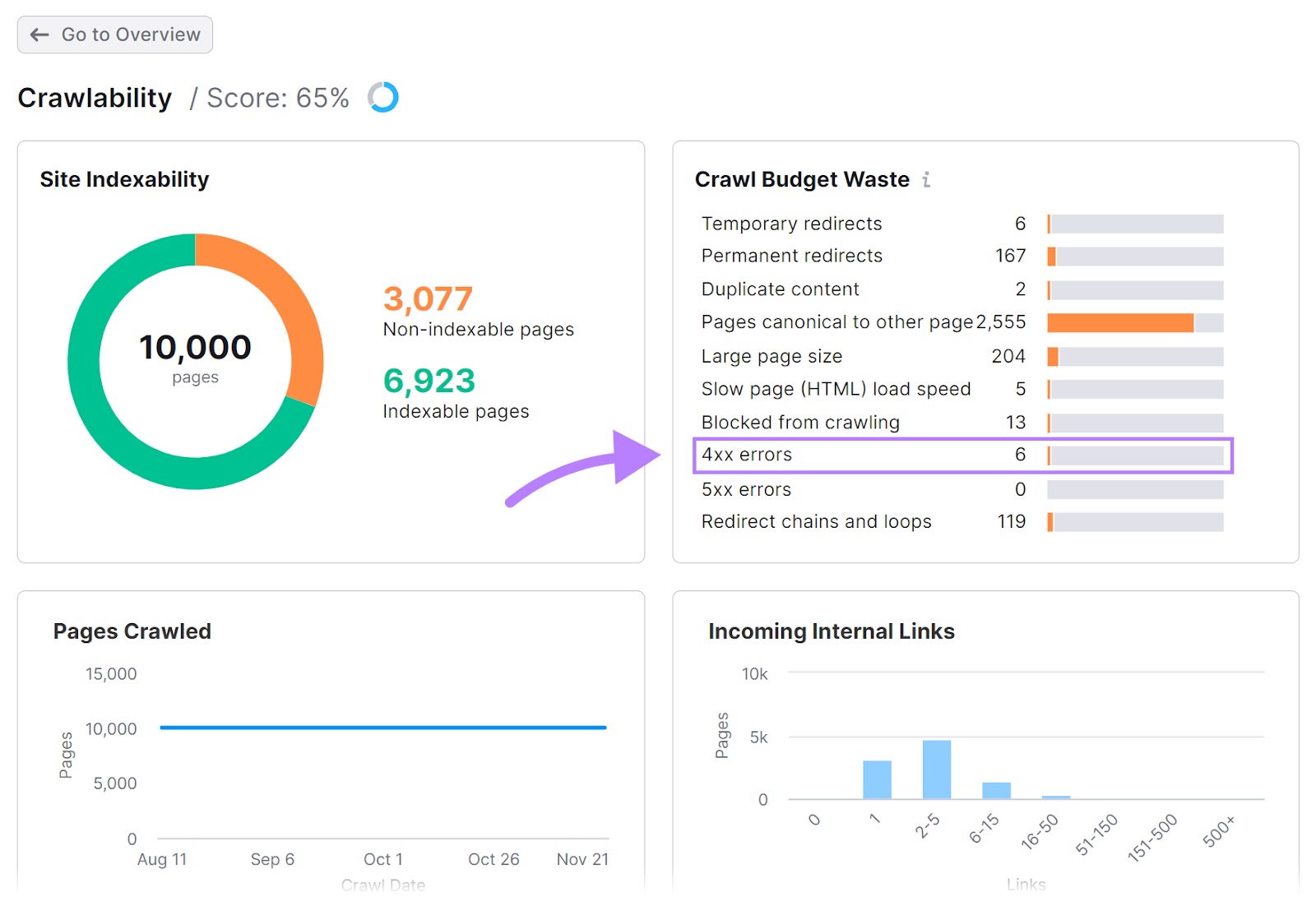

Then, select a specific error you want to solve. And click the corresponding bar next to it in the “Crawl Budget Waste” module.

We’ve selected 4xx errors for our example.

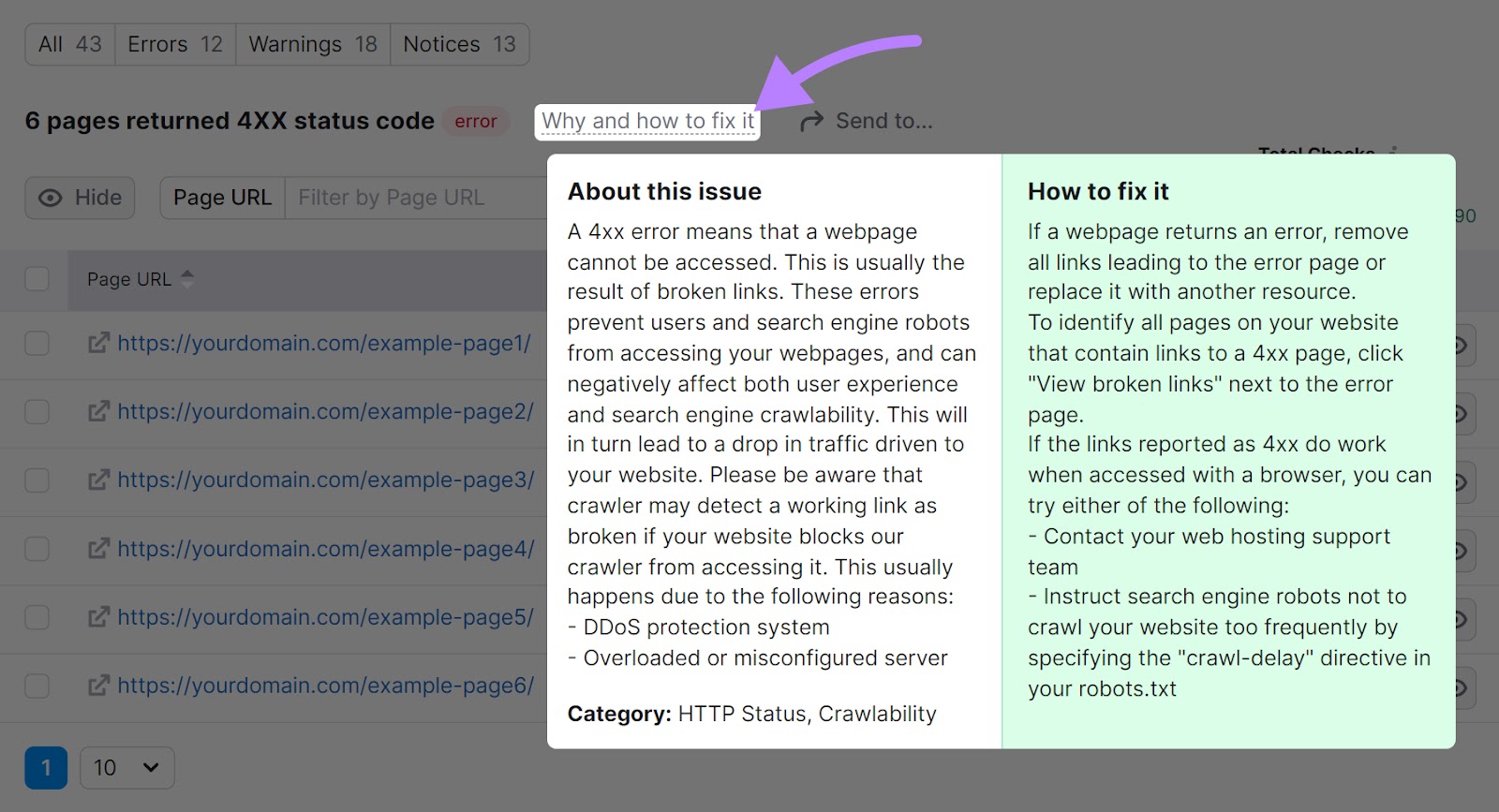

On the next screen, click “Why and how to fix it.”

You’ll get the information required to understand the issue. And advice on how to solve it.

Google Search Console

Google Search Console is another excellent tool for identifying crawl errors.

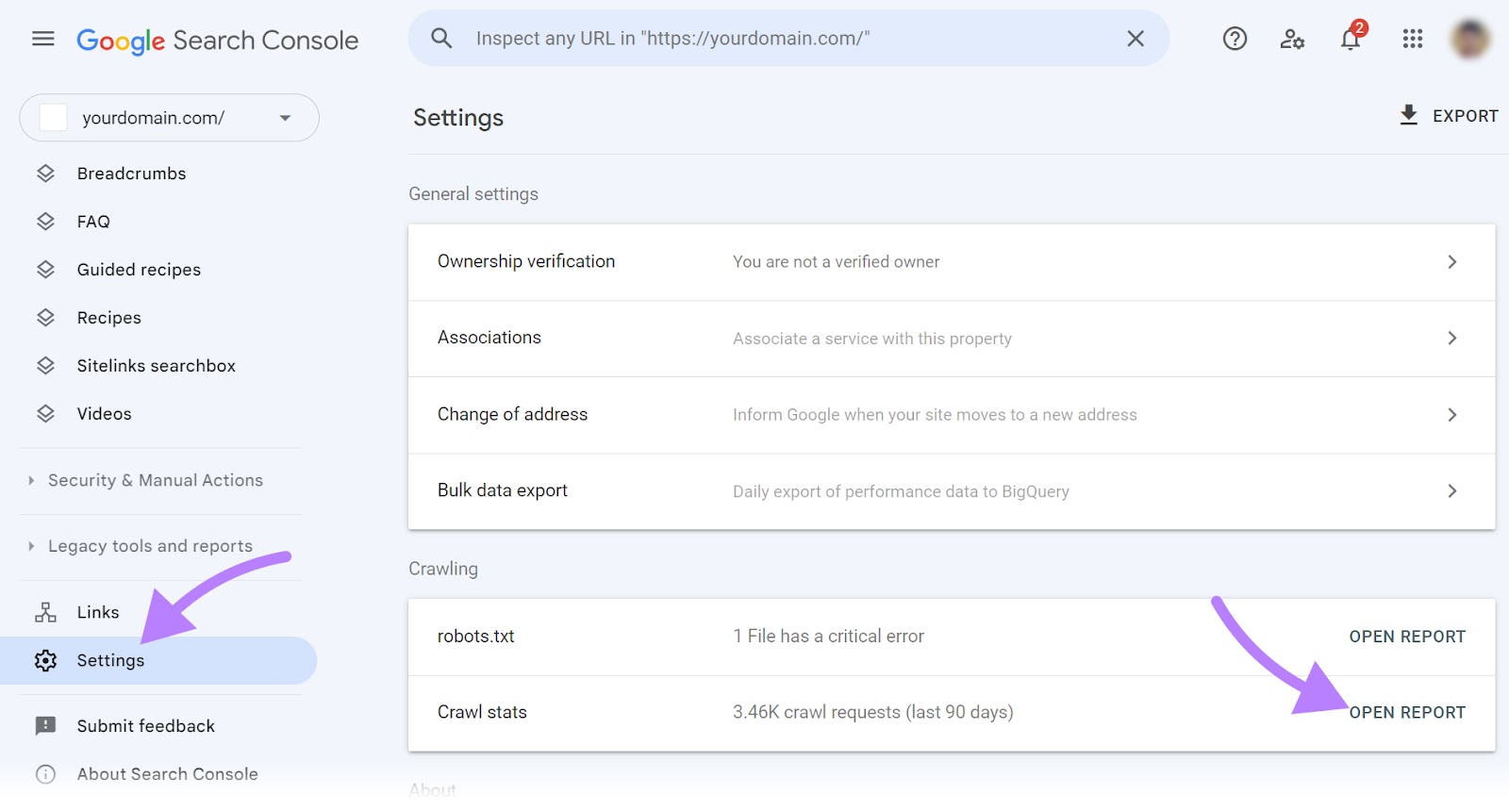

Head to your GSC account and click “Settings” in the left sidebar.

Then, click “OPEN REPORT” next to “Crawl stats.”

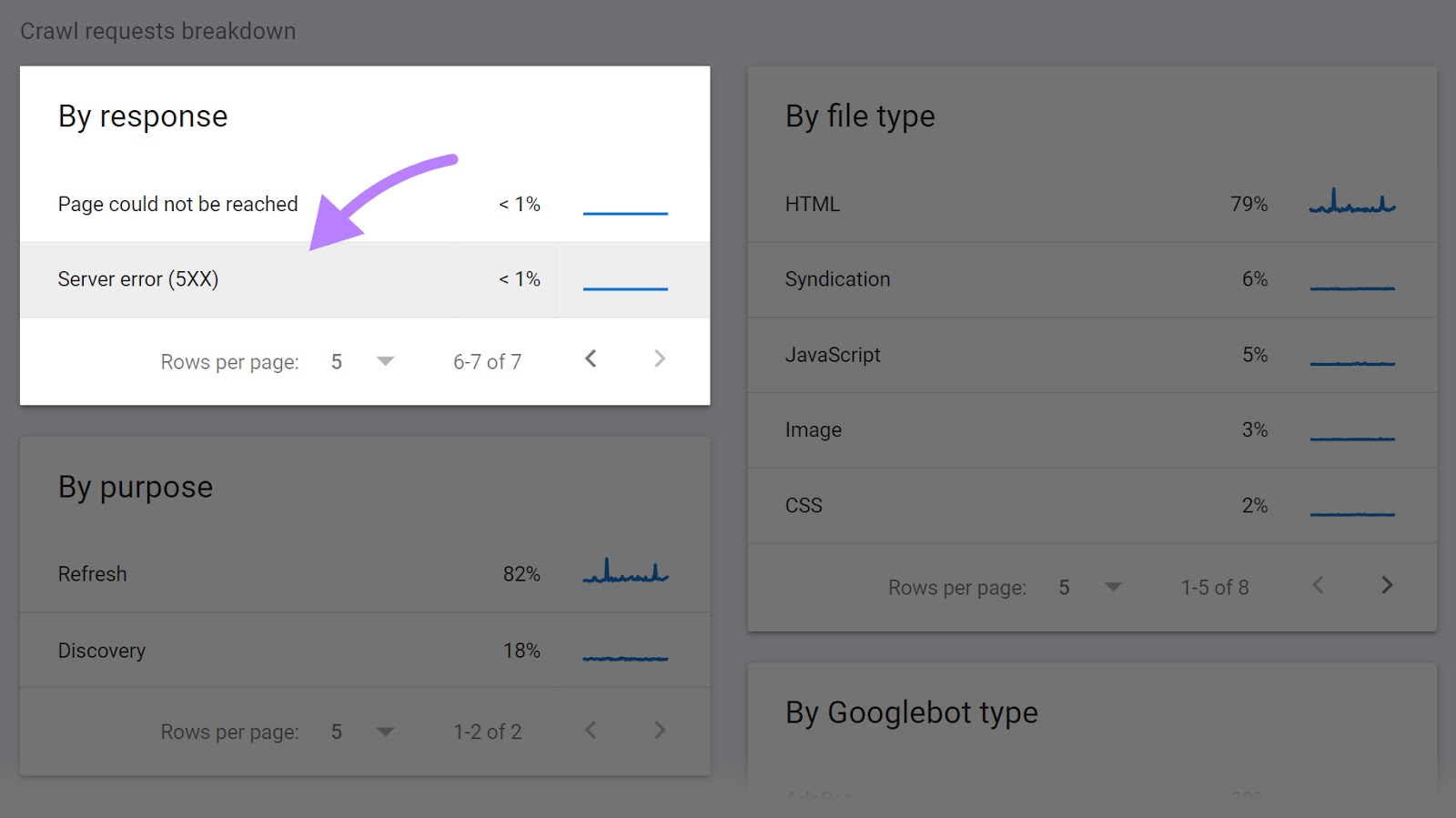

Scroll down to see if Google noticed crawling issues on your site.

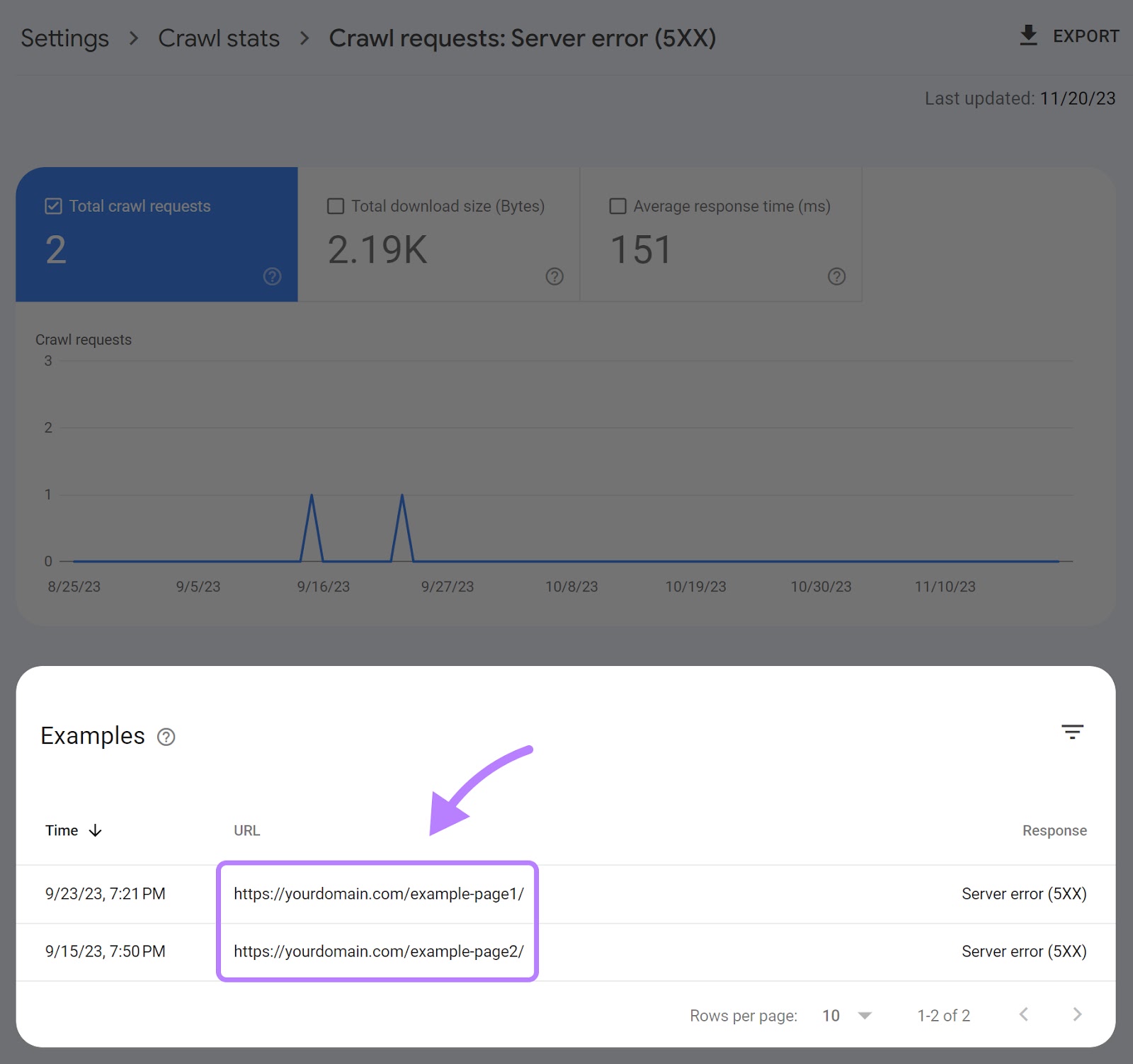

Click on any issue, like the 5xx server errors.

You’ll see the full list of URLs matching the error you selected.

Now, you can address them one by one.

How to Fix Crawl Errors

We now know how to identify crawl errors.

The next step is better understanding how to fix them.

Fixing 404 Errors

You’ll probably encounter 404 errors frequently. And the good news is that they’re easy to fix.

You can use redirects to fix 404 errors.

Use 301 redirects for permanent redirects because they allow you to retain some of the original page’s authority. And use 302 redirects for temporary redirects.

How do you choose the destination URL for your redirects?

Here are some best practices:

- Add a redirect to the new URL if the content still exists

- Add a redirect to a page addressing the same or a highly similar topic if the content no longer exists

There are three main ways to deploy redirects.

The first method is to use a plugin.

Several popular redirect plugins are available for WordPress.

The second method is to add redirects directly in your server configuration file.

Here’s what a 301 redirect would look like in an .htaccess file on an Apache server.

Redirect 301 /old-page/ https://www.yoursite.com/new-page/

You can break this line down into four parts:

- Redirect: Specifies that we want to redirect the traffic

- 301: Indicates the redirect code, stating that it’s a permanent redirect

- /old-page/: Identifies the URL path to redirect from. Apache’s Redirect directive expects a path beginning with a slash here, not a full URL.

- https://www.yoursite.com/new-page/: Identifies the URL to redirect to

We don’t recommend this option if you’re a beginner. Because it can negatively impact your site if you’re unsure of what you’re doing. So, make sure to work with a developer if you opt to go this route.
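If you or your developer have many pages to redirect, the rules can be generated rather than typed by hand. The following Python sketch builds one Apache `Redirect` line per old/new URL pair. The function name `build_redirect_rule` is hypothetical. It assumes the old URL lives on the site the .htaccess file serves, and it keeps only the path of the old URL, since Apache’s `Redirect` directive takes a URL path as its source argument.

```python
from urllib.parse import urlsplit


def build_redirect_rule(old_url: str, new_url: str, permanent: bool = True) -> str:
    """Build one mod_alias Redirect line for an .htaccess file."""
    status = 301 if permanent else 302
    # Keep only the path of the old URL (e.g., "/old-page/").
    old_path = urlsplit(old_url).path or "/"
    return f"Redirect {status} {old_path} {new_url}"


print(build_redirect_rule(
    "https://www.yoursite.com/old-page/",
    "https://www.yoursite.com/new-page/",
))
# Redirect 301 /old-page/ https://www.yoursite.com/new-page/
```

Review the generated lines before adding them to a live configuration file, and test them on a staging copy of the site if you can.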

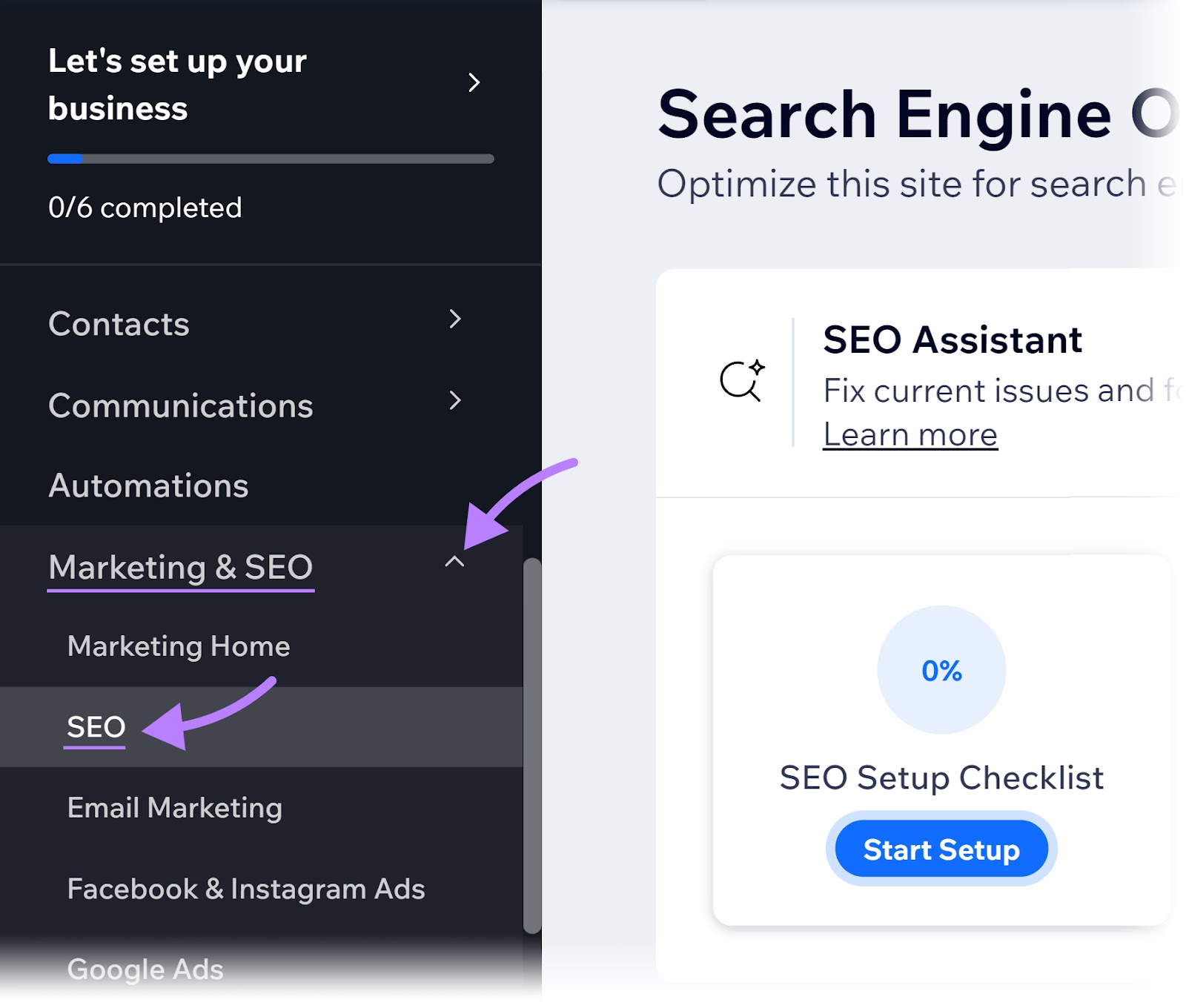

Lastly, you possibly can add redirects instantly from the backend should you use Wix or Shopify.

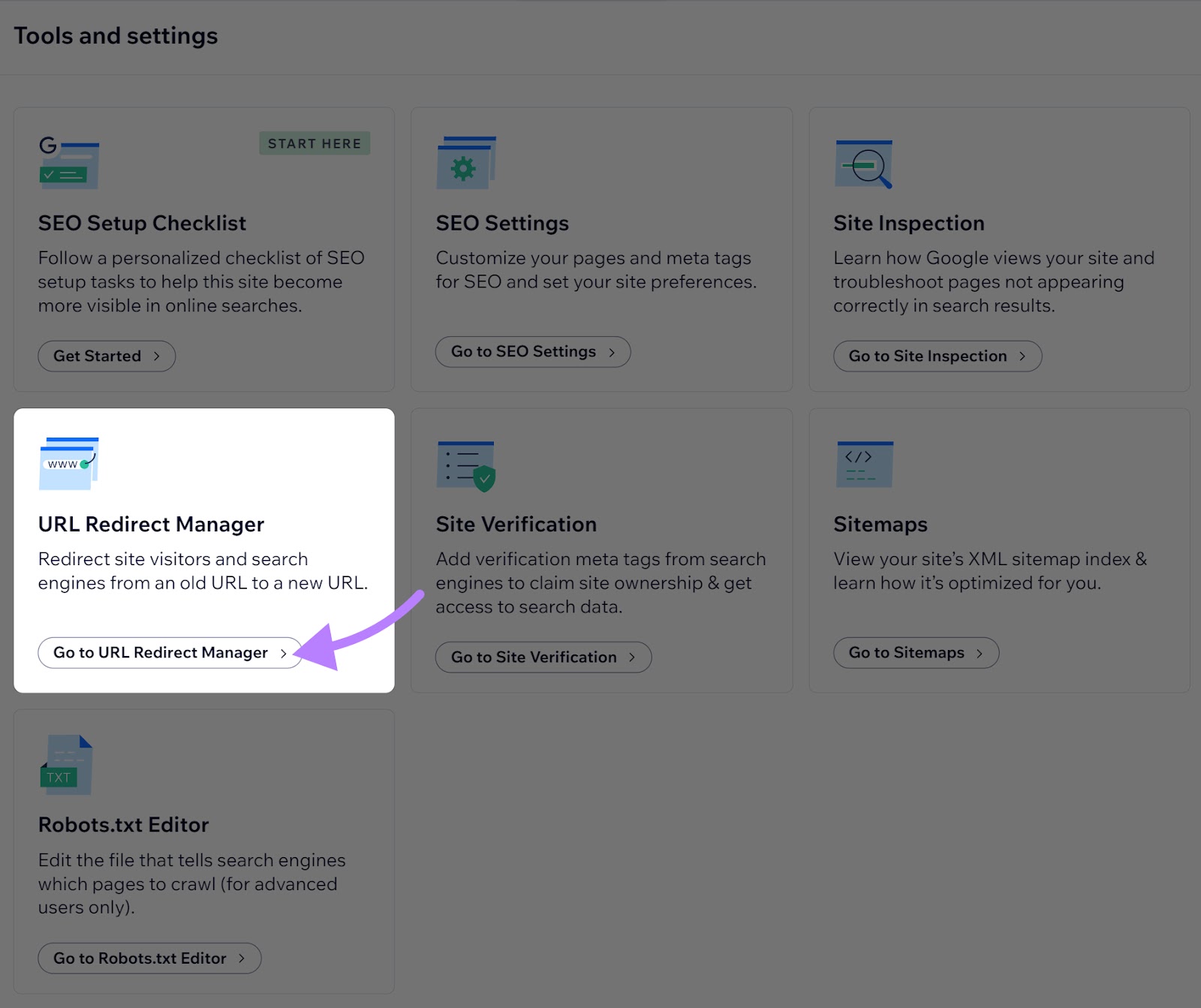

When you’re utilizing Wix, scroll to the underside of your web site management panel. Then click on on “web optimization” beneath “Advertising & web optimization.”

Click on “Go to URL Redirect Supervisor” situated beneath the “Instruments and settings” part.

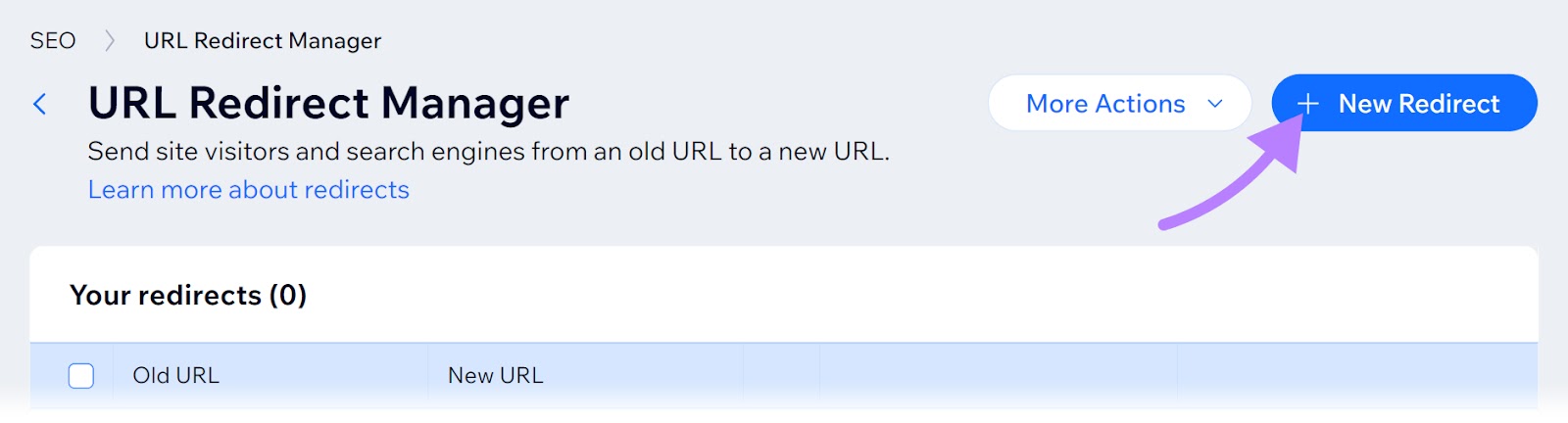

Then, click the “+ New Redirect” button in the top right corner.

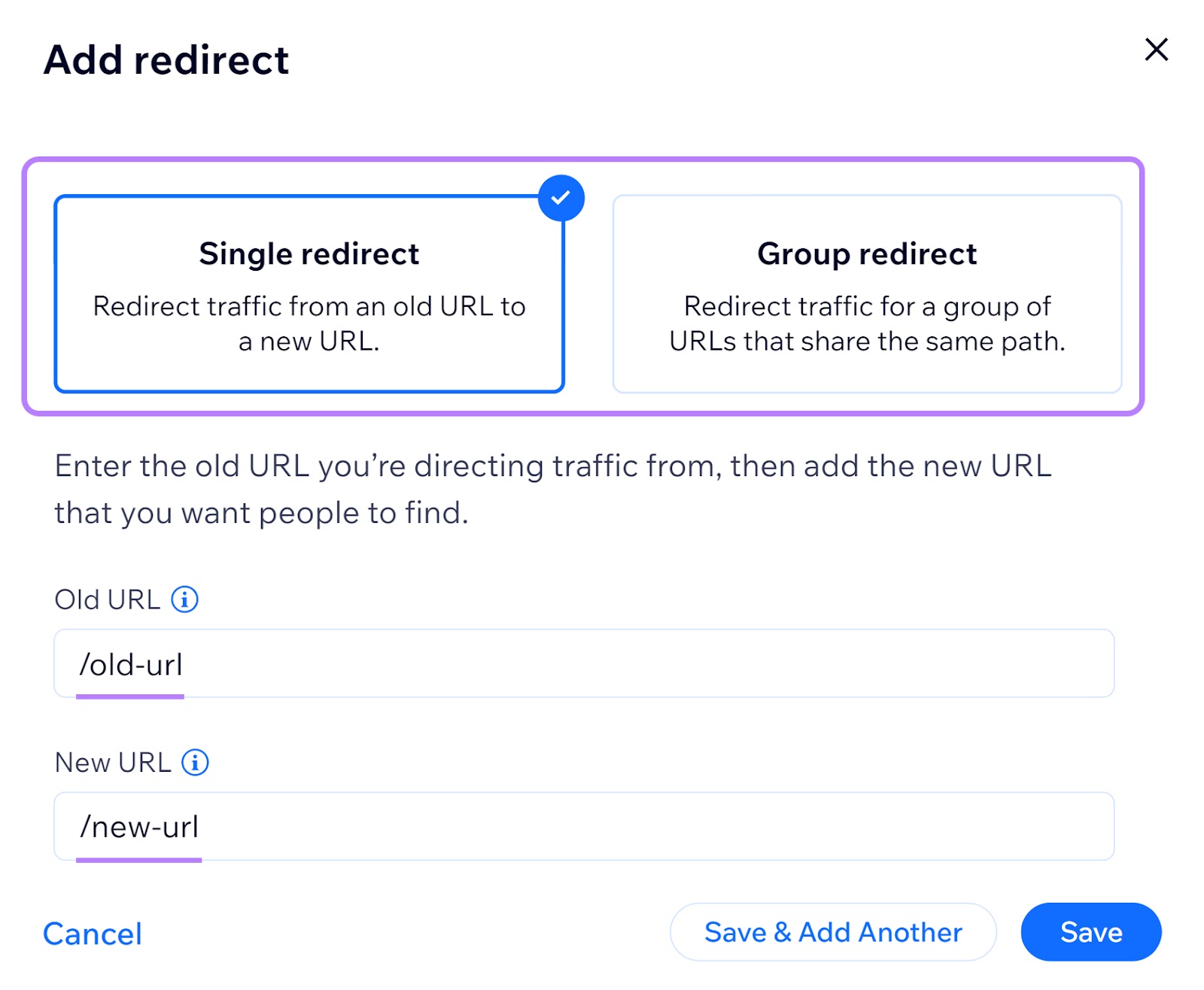

A pop-up window will appear. Here, you can choose the type of redirect and enter the old URL you want to redirect from and the new URL you want to redirect to.

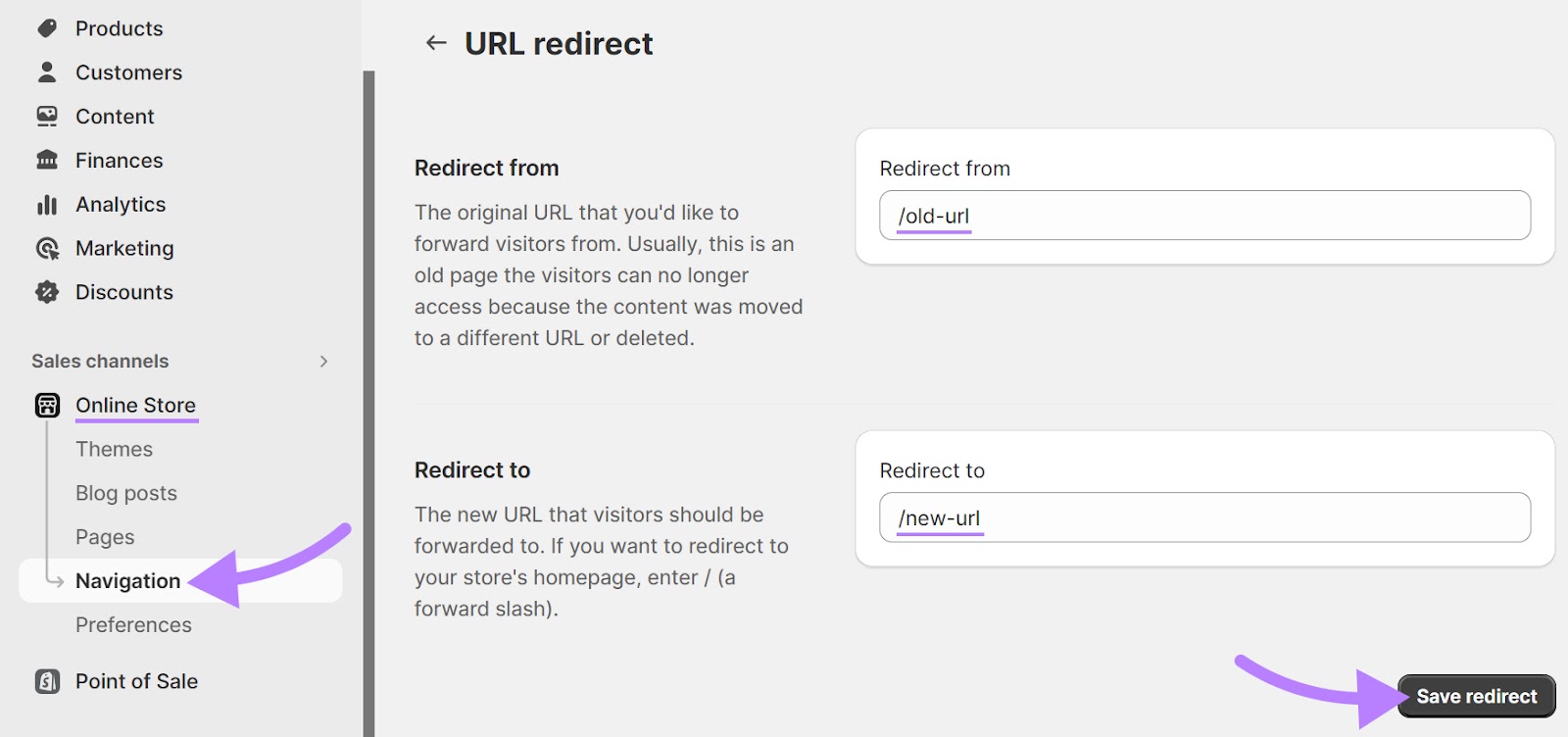

Here are the steps to follow if you’re using Shopify:

Log into your account and click “Online Store” under “Sales channels.”

Then, select “Navigation.”

From here, go to “View URL Redirects.”

Click the “Create URL redirect” button.

Enter the old URL that you wish to redirect visitors from and the new URL that you want to redirect your visitors to. (Enter “/” to target your store’s home page.)

Finally, save the redirect.

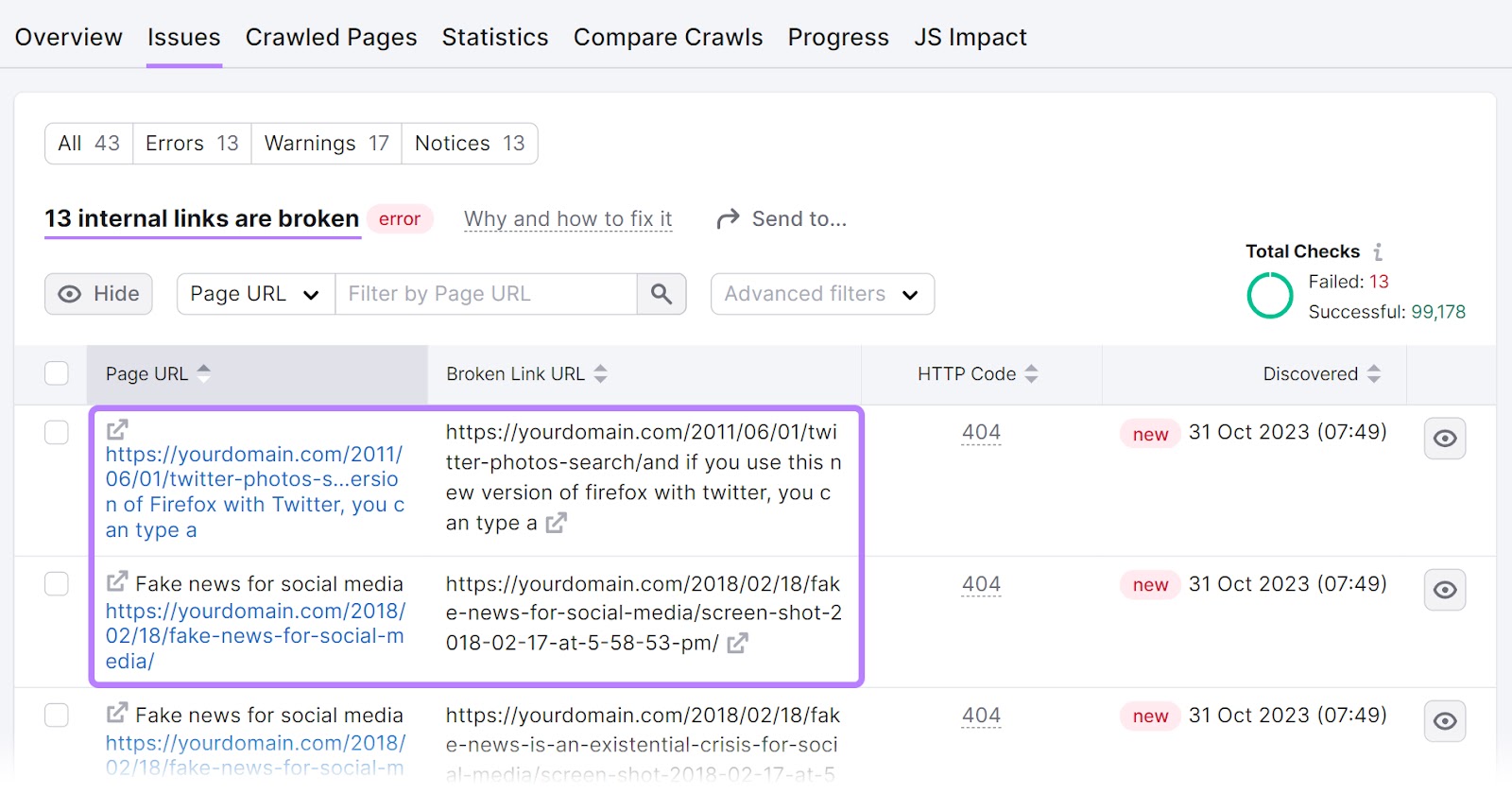

Broken links (links that point to pages that can’t be found) can also be a reason behind 404 errors. So, let’s see how we can quickly identify broken links with the Site Audit tool and fix them.

Fixing Broken Links

A broken link points to a page or resource that doesn’t exist.

Let’s say you’ve been working on a new article and want to add an internal link to your about page at “yoursite.com/about.”

Any typo in your link will create a broken link.

So, you’ll get a broken link error if you’ve forgotten the letter “b” and entered “yoursite.com/aout” instead of “yoursite.com/about.”

Broken links can be either internal (pointing to another page on your site) or external (pointing to another website).
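The “/aout” typo above is easy to catch automatically if you have a list of the paths that really exist on your site. The Python sketch below collects the links in a page’s HTML and reports internal ones that aren’t in that list. The names `LinkCollector` and `find_broken_internal_links`, and the idea of a `known_paths` set, are assumptions made for this example. It only checks internal links written as site-relative paths.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_broken_internal_links(html, known_paths):
    """Return site-relative links that are not among the known paths."""
    collector = LinkCollector()
    collector.feed(html)
    return [
        link for link in collector.links
        if link.startswith("/") and link not in known_paths
    ]


page = '<a href="/about">About</a> <a href="/aout">About (typo)</a>'
print(find_broken_internal_links(page, {"/", "/about"}))  # ['/aout']
```

External links would need an actual HTTP request to verify, which this sketch leaves out.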

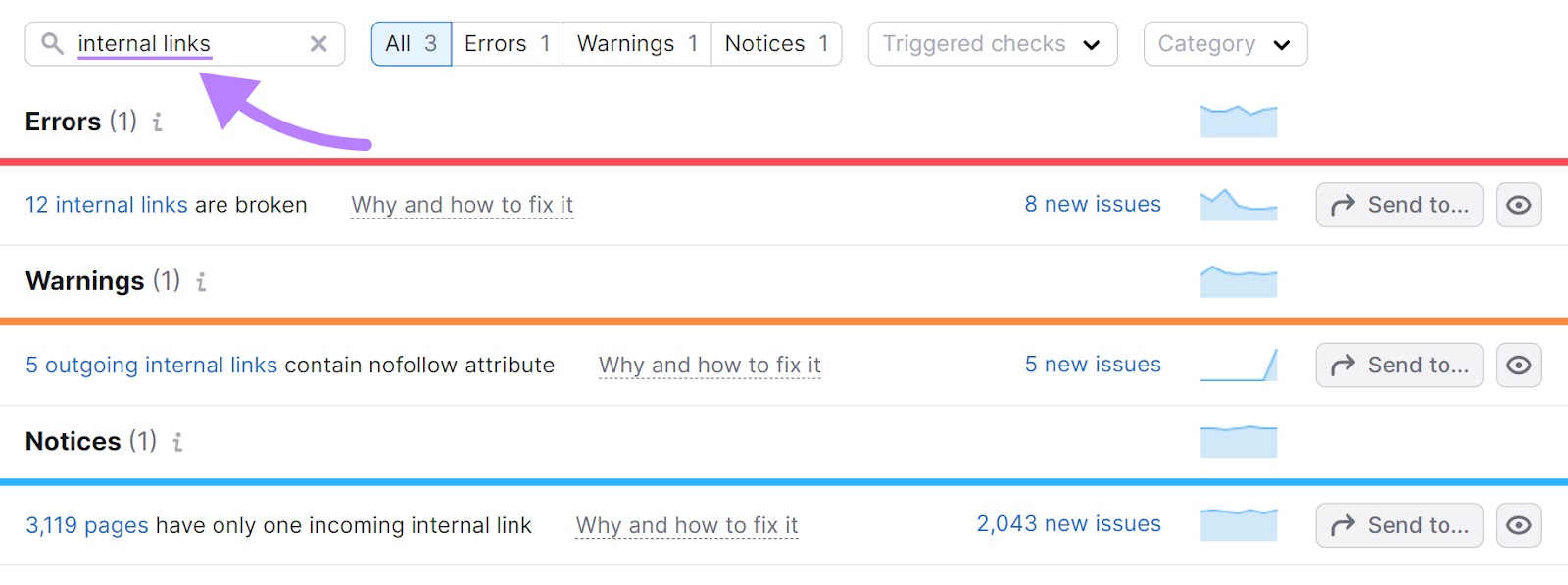

To find broken links, configure Site Audit if you haven’t yet.

Then, go to the “Issues” tab.

Now, type “internal links” in the search bar at the top of the table to find issues related to broken links.

And click the blue, clickable text in the issue to see the complete list of affected URLs.

To fix these, change the link, restore the missing page, or add a 301 redirect to another relevant page on your site.

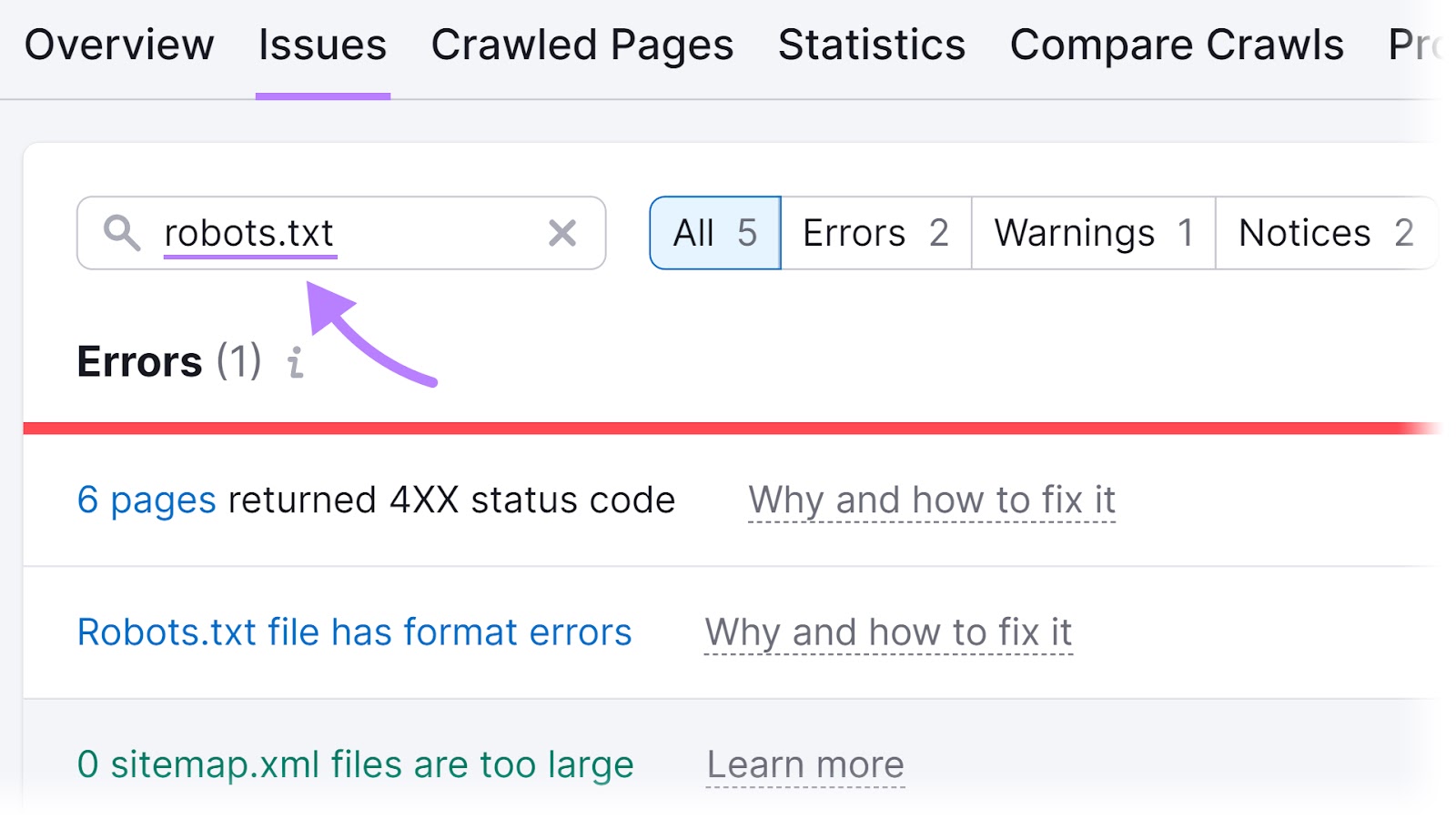

Fixing Robots.txt Errors

Semrush’s Site Audit tool can also help you resolve issues regarding your robots.txt file.

First, set up a project in the tool and run your audit.

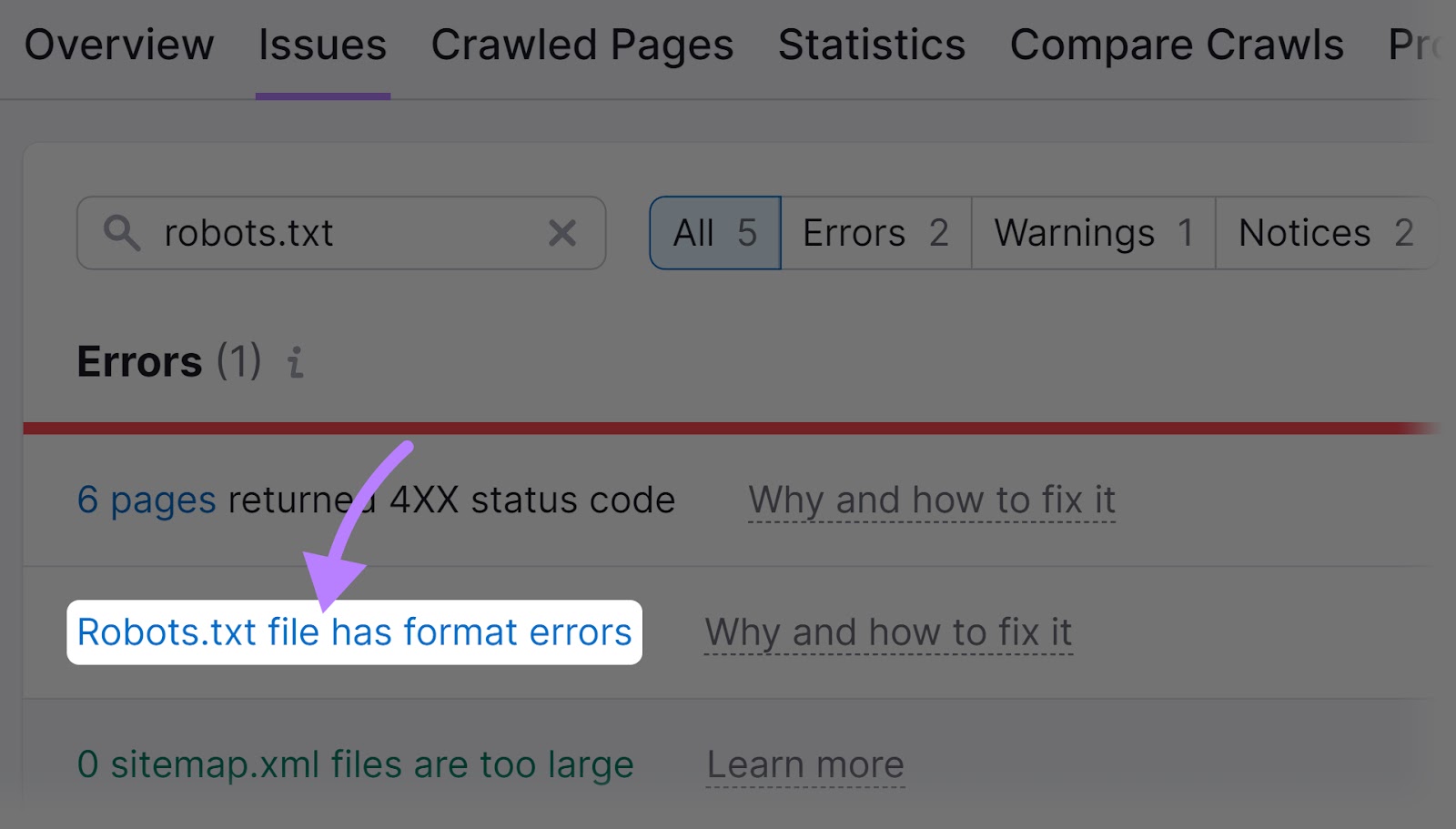

Once complete, navigate to the “Issues” tab and search for “robots.txt.”

You’ll now see any issues related to your robots.txt file, each of which you can click. For example, you might see a “Robots.txt file has format errors” link if your file has format errors.

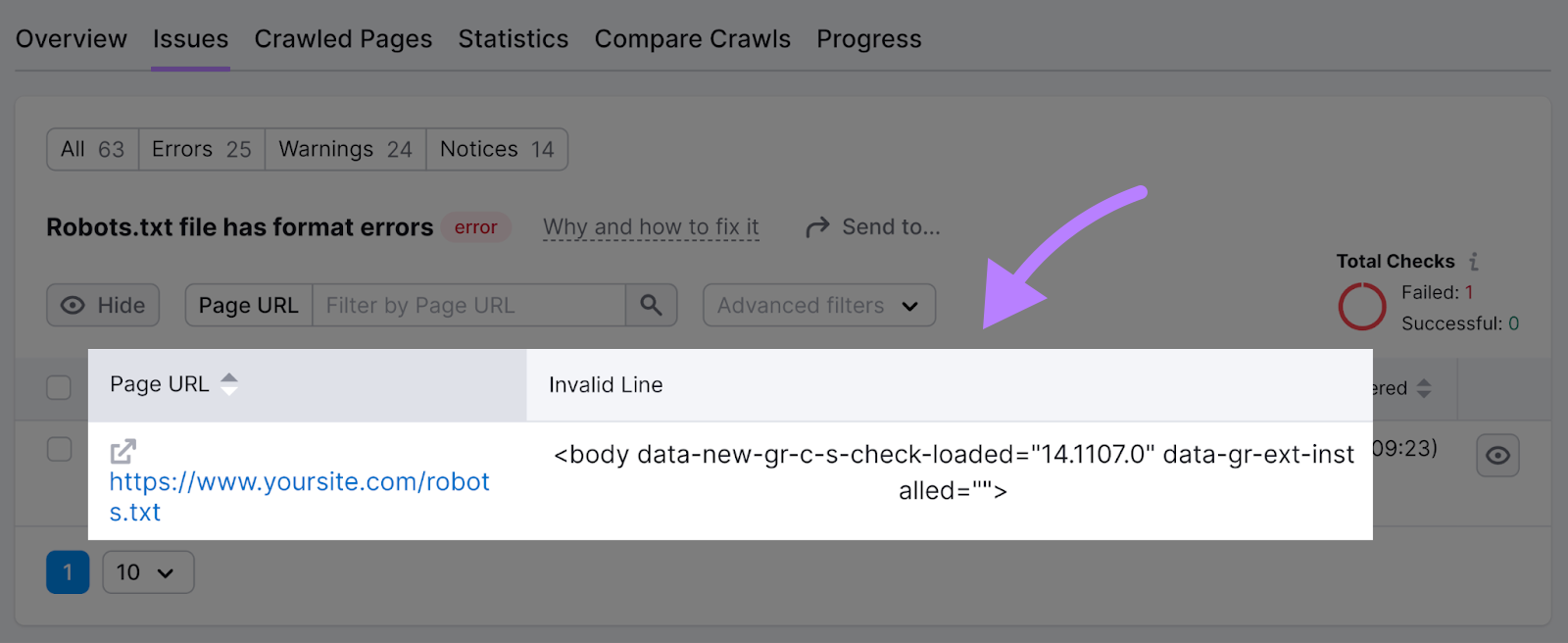

Go ahead and click the blue, clickable text.

And you’ll see a list of invalid lines in the file.

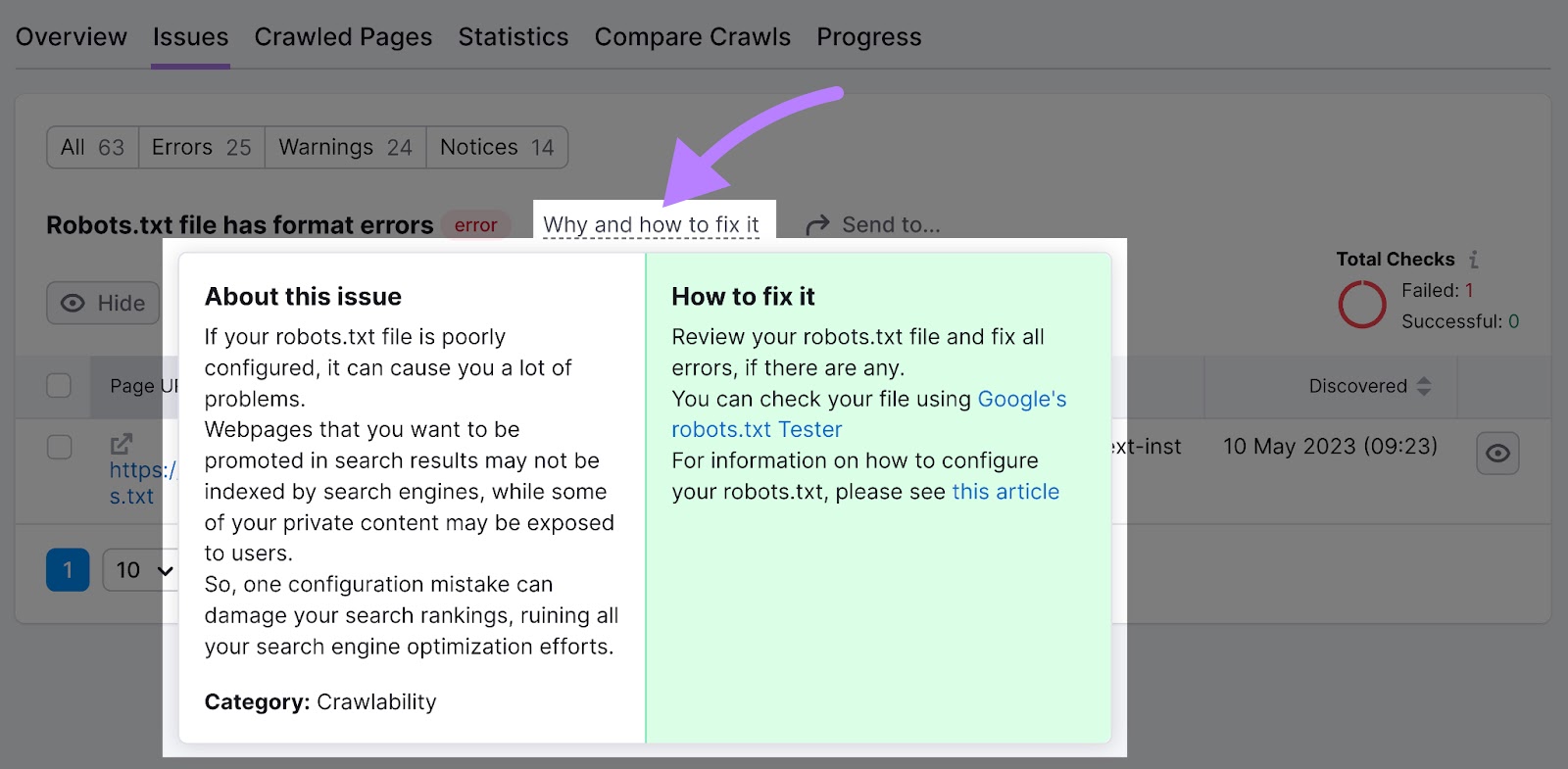

You can click “Why and how to fix it” to get specific instructions on how to fix the error.

Monitor Crawlability to Ensure Success

To make sure your site can be crawled (and indexed and ranked), you should first make it search engine-friendly.

Your pages might not show up in search results if it isn’t. So, you won’t drive any organic traffic.

Finding and fixing problems with crawlability and indexability is easy with the Site Audit tool.

You can even set it up to crawl your site automatically on a recurring basis. To ensure you stay aware of any crawl errors that need to be addressed.
